Uncalibrated quantum experiments act classically

Don’t read this post. In this post, I stumble around vaguely in the direction of predicting that density matrices are a thing. While I kind of like that I figured this out on my own, it is a standard and well known part of quantum mechanics, and you’ll find a much clearer introduction in a quantum mechanics class. (Maybe not the intro class; mine didn’t cover it or this post wouldn’t be here; but it’s not super advanced.)

Here’s a pretty obvious fact from probability theory:

Let A and B be two disjoint events, meaning that at most one of them can happen. For example, if you flip a coin it might land heads, or it might land tails, or it might do neither, perhaps slipping into a heating duct never to be seen again. But it definitely won’t come up both heads and tails. Then:

P(A or B) = P(A) + P(B)

One of the strange aspects of quantum mechanics is that this fact does not hold in a quantum system.

In a quantum system, an event does not directly have a probability: a real number between 0 and 1. Rather, it has an amplitude: a complex number of magnitude at most 1.

What’s the meaning of this amplitude? The Born rule says that the probability that an event will be observed is the square of the magnitude of the amplitude of that event. Labeling this function Born(α), we have:

Born(α) = |α|²

The strange fact from quantum mechanics is that when there are two ways, A and B, for something to happen, and you want to know the probability that it happens, you don’t add the probabilities of A and B together. You add their amplitudes. For example, if A has amplitude i/2 and B has amplitude -i/2, then (A or B) has amplitude 0. Looking at their probabilities (obtained via the Born rule), we get this:

P(A) = |i/2|² = 1/4

P(B) = |-i/2|² = 1/4

P(A or B) = |i/2 - i/2|² = 0
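As a small sanity check, the three lines above can be reproduced with Python's built-in complex numbers. This is only a sketch; the helper name `born` is my own, chosen to mirror the Born(α) notation:

```python
# Born rule: the probability of an event is the squared magnitude of its amplitude.
def born(amplitude: complex) -> float:
    return abs(amplitude) ** 2

a = 0.5j   # amplitude of A: i/2
b = -0.5j  # amplitude of B: -i/2

p_a = born(a)           # |i/2|^2
p_b = born(b)           # |-i/2|^2
p_a_or_b = born(a + b)  # amplitudes add first, then the Born rule is applied

print(p_a, p_b, p_a_or_b)
```

Note that `p_a + p_b` is 1/2 while `p_a_or_b` is 0: adding amplitudes is not the same as adding probabilities.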

We combined two events that could happen, to get one that can’t!

However, in this post I’d like to explain how in a poorly calibrated experiment, this strange quantum behavior will vanish and be replaced with ordinary probability. First I’ll give the basic explanation. Then I’ll follow up with an analysis of an experiment.

(I’ve been using sloppy language. You wouldn’t normally call events A and B disjoint, nor use the word “or”. But if you look at the setup of an actual experiment that leads to this, and describe it with ordinary language, “disjoint” and “or” are absolutely fair words to use to describe the situation. I think the reason we avoid them when talking about quantum mechanics is because this behavior is so strange.)

What happens if you don’t calibrate

In a quantum mechanical experiment, the phase of an amplitude can be very sensitive to the placement of the parts you use. (The phase of a complex number, when it is expressed in polar coordinates, is its angle.) For example, if you’re dealing with a laser, then the phase of the amplitude you get will depend on the distance the laser travels, relative to the wavelength of the light. If the distance is off by half a wavelength (which is perhaps around 300nm), then the amplitude will change by a factor of -1!

My hypothesis in this post is that if you don’t calibrate your experiment properly, then the phases of the amplitudes will be unpredictable, so much so that it’s accurate to model each phase as an independent, uniformly random variable.

Taking the above example, this means that instead of (A or B) having a combined amplitude of i/2 - i/2, it would have a combined amplitude of:

si/2 - ti/2

where s and t are independent and uniformly random complex variables of magnitude 1.

This is an unusual kind of model: we have a probability distribution over a quantum amplitude. But that’s exactly what we should have! We’re uncertain about the phases, and when you’re uncertain about something you model it with a probability distribution.

However, what we care about here is the probability, rather than the combined amplitude. To find it, we should take the expected value of the Born rule applied to the amplitude:

E[Born(si/2 - ti/2)]

Remember that s and t are uniformly random variables of magnitude 1. We can simplify this expression:

E[Born(si/2 - ti/2)]

= E[Born(s/2 - t/2)] // by symmetry

= E[Born(s/2 - 1/2)] // by further symmetry

= E[|s/2 - 1/2|²] // using the Born rule

This is the average squared distance from the origin to a random point on a circle of radius 1/2 centered at -1/2. You can solve this with an integral, and you get 1/2.
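The value 1/2 can also be checked numerically. The sketch below (the helper `mean_over_circle` is a hypothetical name of mine) averages |s/2 - 1/2|² over n equally spaced points s = e^(iθ) on the unit circle. Expanding gives |s/2 - 1/2|² = 1/2 - cos(θ)/2, and the cosine term averages to zero over equally spaced angles, so this grid average should match the integral up to floating-point rounding:

```python
import cmath
import math

def mean_over_circle(f, n: int = 1000) -> float:
    """Average f(s) over n equally spaced points s = e^(iθ) on the unit circle."""
    total = 0.0
    for k in range(n):
        s = cmath.exp(2j * math.pi * k / n)
        total += f(s)
    return total / n

# E[|s/2 - 1/2|^2]: mean squared distance from the origin to a point
# on the circle of radius 1/2 centered at -1/2.
value = mean_over_circle(lambda s: abs(s / 2 - 0.5) ** 2)
print(value)
```

A Monte Carlo average over random θ would also work; the equally spaced grid is just a deterministic choice.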

Which suggests that the strange quantum-mechanical behavior has vanished! We now have this, which looks just like ordinary probability:

P(A) = 1/4

P(B) = 1/4

P(A or B) = 1/2

And indeed, if instead of starting with amplitudes i/2 and -i/2, we start with an arbitrary α and β, you can do the same math and get:

P(A) = |α|²

P(B) = |β|²

P(A or B) = E[|sα + tβ|²] = |α|² + |β|²
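The "same math" can be written out as a short derivation. This is my sketch of that step, using only that |s| = |t| = 1 and that s and t are independent with E[s] = E[t] = 0 (which holds for a uniformly random phase):

```latex
\begin{aligned}
|s\alpha + t\beta|^2
  &= (s\alpha + t\beta)\,\overline{(s\alpha + t\beta)} \\
  &= |s|^2|\alpha|^2 + |t|^2|\beta|^2 + s\bar{t}\,\alpha\bar{\beta} + \bar{s}t\,\bar{\alpha}\beta \\
  &= |\alpha|^2 + |\beta|^2 + 2\,\operatorname{Re}\!\left(s\bar{t}\,\alpha\bar{\beta}\right)
     && (|s| = |t| = 1) \\[4pt]
\mathbb{E}\!\left[s\bar{t}\right] &= \mathbb{E}[s]\,\mathbb{E}[\bar{t}] = 0
     && (s, t \text{ independent},\ \mathbb{E}[e^{i\theta}] = 0) \\[4pt]
\Rightarrow\ \mathbb{E}\!\left[|s\alpha + t\beta|^2\right] &= |\alpha|^2 + |\beta|^2
\end{aligned}
```

The cross term is the only place interference can show up, and the independent random phases average it away.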

That is, the probabilities of disjoint events add again. We started with the rules of quantum mechanics, and obtained a rule from ordinary probability theory.

Possible explanation of the Born rule?

This feels to me like it’s close to an explanation of the Born rule. The explanation would go like this:

In our big, messy, classical world we’re never certain of the phase of any amplitude. And in the small, precise world of carefully crafted quantum experiments, we pin down the phases precisely. The purpose of the Born rule is to describe the boundary between these two models of the world: it turns a deterministic quantum model (which only has amplitudes, not probabilities) into a probabilistic classical model. To justify this leap, we should show that (i) there is legitimate uncertainty that cannot be eliminated by a better model, and (ii) the laws of probability theory hold in our proposed probabilistic model. For (i), we are legitimately uncertain about the phases of the amplitudes, and there is no feasible way to fix this. For (ii), I have given an argument that the probabilities of disjoint events add under the Born rule, which is the most important of the laws of probability theory.

The trouble with this explanation is that it’s only appropriate to add amplitudes together in particular situations, and phases are only random in particular situations, and it’s not clear whether these sets of situations overlap much.

The rest of the post

In the rest of this post, I work through a particular experiment (similar to a Mach-Zehnder interferometer), showing in more detail how you can get into the above situation of adding amplitudes that have random phases. To give an outline:

- I describe three experiments with partially-silvered mirrors. Importantly, they are uncalibrated, i.e. their components are not precisely placed. I give a precise definition of what counts as “uncalibrated”.

- I show that using the Born rule |α|², the outcome of the experiment can be predicted with classical probability, but using the (incorrect, but plausible) alternative rule |α|, it cannot be.

The Experiments

The three experiments use a single-photon light source, partially-silvered mirrors, and a photon detector. The second and third experiments are minor variations on the first. In every experiment, what the experimenter cares about is whether the detector beeps.

Background: Partially-silvered mirrors

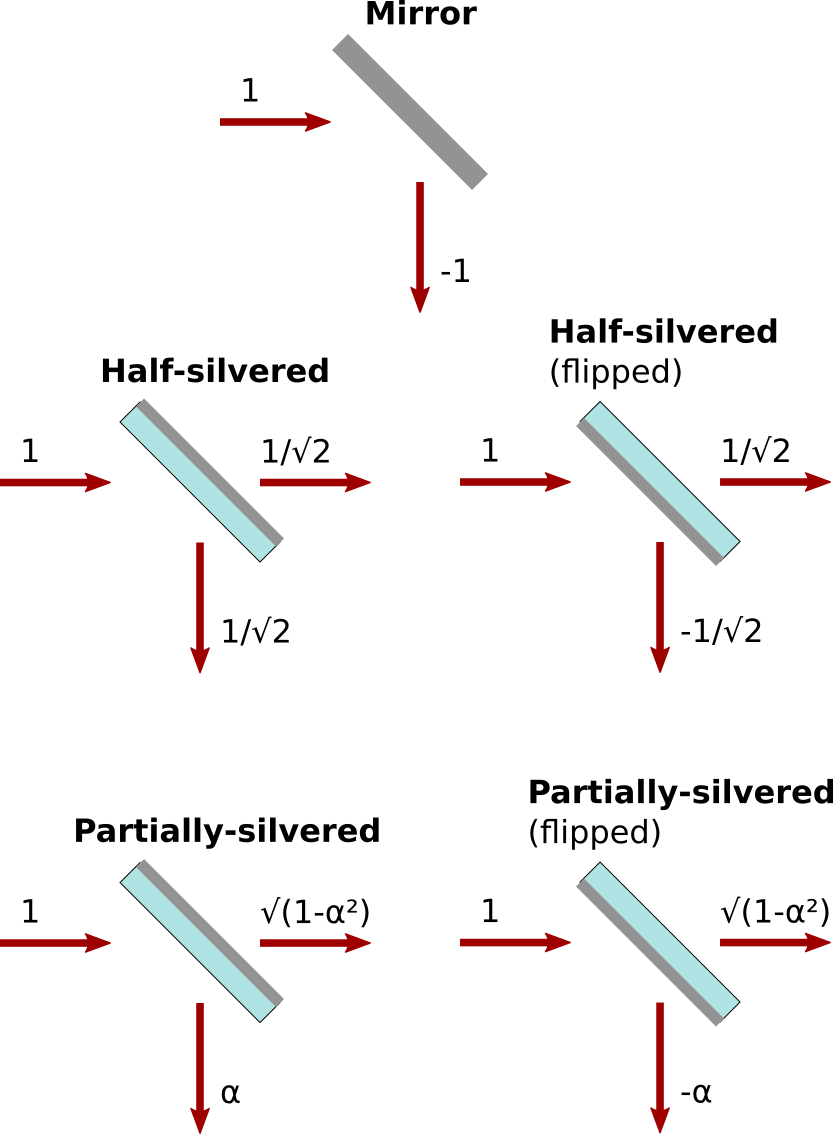

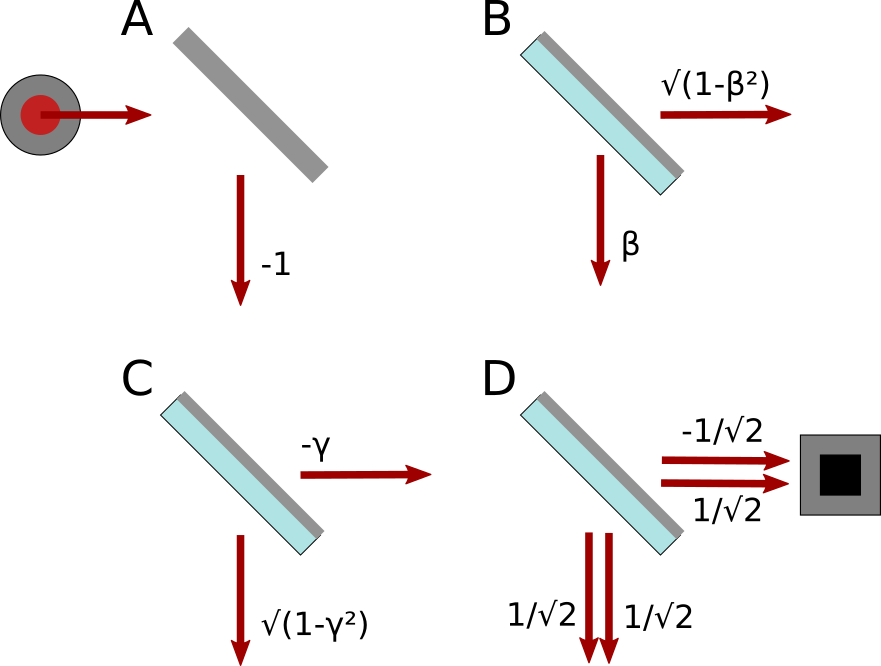

Here are diagrams for how a photon interacts with a partially silvered mirror. It can either go straight through or reflect off, and the amplitude of each possibility depends on how silvered the mirror is (an unsilvered mirror would just be glass), and how it is oriented:

(The amplitude α is an unspecified real number between 0 and 1. If it’s 1/√2, then the mirror is

half-silvered. If α is smaller it’s less than half-silvered, and if α is bigger it’s more than

half-silvered.)

Let’s work through an example of how to use these diagrams to predict the outcome of an experiment made up of some mirrors, a coherent light source (a laser), and a photon detector. To determine the probability that the detector beeps, you should:

- Consider all paths from the light source to the detector.

- Determine the amplitude of each path, by multiplying the amplitudes of each of its steps.

- To determine the amplitude of the outcome in which the detector beeps, add together the amplitudes of each path that leads to the detector.

- To determine the probability of the outcome, you use the Born rule: take the absolute value of the amplitude and square it.

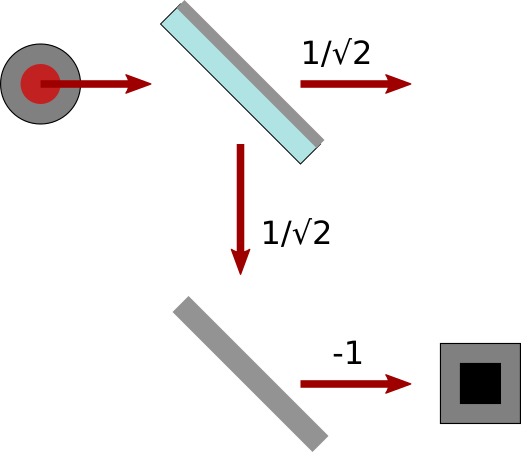

So those are the rules. Let’s apply them to a simple example experiment:

Applying the rules:

- There’s only one path that leads to the detector. It goes right, down, right.

- We multiply the amplitudes we see along the path (1/√2 and -1) to get -1/√2.

- If there were other paths, we’d add their contributions too. But there’s only one, so our total amplitude is -1/√2.

- The probability of the detector beeping is |-1/√2|² = 1/2. That’s why this kind of mirror is called half-silvered.

That’s all there is to it.
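The rules above are mechanical enough to write down as code. Here is a minimal sketch, assuming each path is given as a plain list of its step amplitudes (the function names are mine, not standard terminology). It applies the rules to the single-path example, and to the earlier two-path case with amplitudes i/2 and -i/2:

```python
import math
from functools import reduce
from operator import mul

def path_amplitude(steps: list[complex]) -> complex:
    """Multiply the amplitudes of each step along one path."""
    return reduce(mul, steps, 1 + 0j)

def detection_probability(paths: list[list[complex]]) -> float:
    """Add the amplitudes of all paths that reach the detector, then apply the Born rule."""
    total = sum((path_amplitude(p) for p in paths), 0j)
    return abs(total) ** 2

# The example above: one path whose steps have amplitudes 1/√2 and -1.
p = detection_probability([[1 / math.sqrt(2), -1]])

# Two single-step paths with amplitudes i/2 and -i/2 cancel completely.
interference = detection_probability([[0.5j], [-0.5j]])

print(p, interference)
```

`p` comes out as 1/2 up to floating-point rounding.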

First experiment: Uncalibrated Mach-Zehnder interferometer

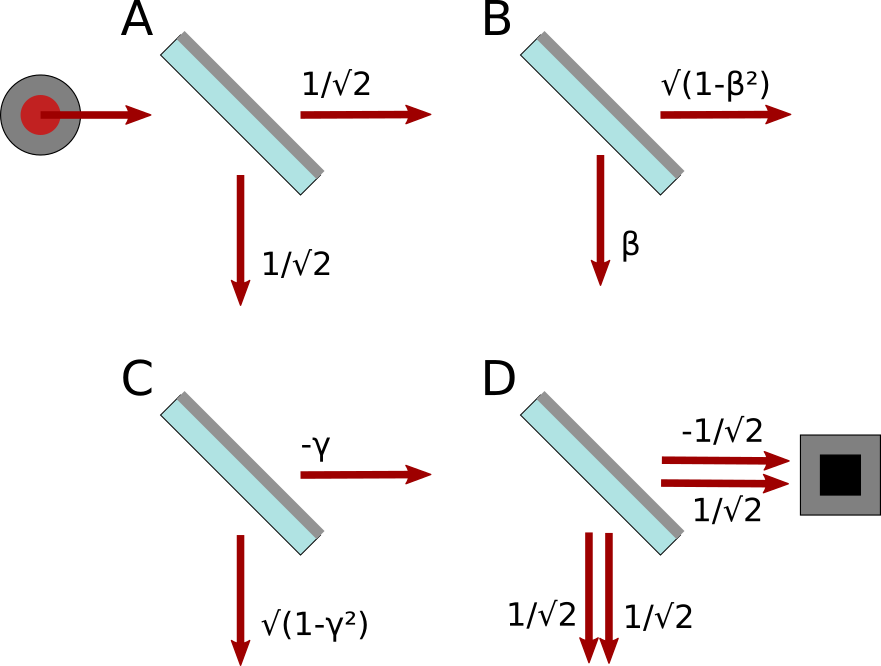

Here’s the setup of the first experiment:

Note that B and C are partially silvered, but need not be exactly half-silvered. I call their amplitude of reflection β and -γ respectively. There’s one tricky thing going on here, on the path from D to the detector. This amplitude is either -1/√2 or 1/√2, depending on whether the incoming photon came from B or C, respectively. (This follows from the diagrams I gave for the mirrors. I’m not just making it up!)

(If you know what a Mach-Zehnder interferometer is, this setup is the same except that B and C are only partially-silvered, allowing the photon to sometimes escape into the air.)

There’s one other crucial feature for this setup, which is the whole reason for this post. It must be strongly uncalibrated. I’ll give a precise definition in a second, but first an intuitive one.

A setup like this is extremely sensitive to the placement of its parts. It’s sensitive to their placement on the scale of the wavelength of the light being sent through them, which for red light is about 700nm. Specifically, a photon’s phase continuously changes as it travels. Every 700nm (for red light), it goes full circle. So if the length between two of the mirrors is off by 350nm, that will multiply the amplitude by -1. If it’s off by 175nm, that will multiply the amplitude by i. You might expect this experiment to be finicky. You’d be right.
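To make the sensitivity concrete, here is a sketch that models the extra phase picked up over a path-length error d as the factor e^(2πi·d/λ). The sign convention in the exponent is my assumption; with the opposite convention, the 175nm case gives -i instead of i, which doesn't change the argument.

```python
import cmath
import math

WAVELENGTH_NM = 700.0  # red light, as in the text

def phase_factor(path_error_nm: float, wavelength_nm: float = WAVELENGTH_NM) -> complex:
    """Unit complex number multiplying the amplitude when a path is off by path_error_nm.

    Assumes the phase advances by 2π per wavelength travelled, with a positive
    sign in the exponent.
    """
    return cmath.exp(2j * math.pi * path_error_nm / wavelength_nm)

for error_nm in (700.0, 350.0, 175.0):
    z = phase_factor(error_nm)
    print(f"{error_nm:>6} nm -> {z.real:+.3f} {z.imag:+.3f}i")
```

A full wavelength returns the factor to 1, half a wavelength gives -1, and a quarter wavelength gives i.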

I’m going to assume that this setup was not carefully calibrated, its parts are not fastened down, and as a result the phase of a photon taking any path is, for all practical purposes, uniformly random. More precisely:

Strong Uncalibration Assumption: The phase change of a photon travelling through the apparatus can be accurately modeled as uniformly random. Furthermore, the phase change of a photon is independent from one run of the experiment to the next, and the phase change along the top path is independent of the phase change along the bottom path.

Honestly, I’m not sure how realistic this assumption is; I’m relying on intuition here. But if it does hold, it gives a very satisfying account of how quantum mechanics degenerates into ordinary probability when things are uncalibrated. I’d love to hear from a physicist on the matter.

Calculating the amplitude

Let’s see how that works. First, what is the amplitude that the detector beeps?

There are two paths to consider: A-B-D-detector, and A-C-D-detector. Their contributions are:

Top path (A-B-D): 1/√2 * β * -1/√2 = -β/2

Bottom path (A-C-D): 1/√2 * -γ * 1/√2 = -γ/2

Total: -β/2 - γ/2

However, that is only true if the experiment is perfectly calibrated. We are assuming instead that it is uncalibrated, and that the phases of the paths can be modeled as uniformly random.

I will introduce some notation to make it more convenient to discuss uniformly random phase:

⊙ₛ(f(s)) ≜ probability distribution of f(e^(iθ))

where θ is chosen uniformly from [0, 2π)

In other words, ⊙ₛ(f(s)) is a random amplitude which depends on s, an amplitude chosen uniformly at random from the unit circle.

For example:

- ⊙ₛ(s) is a uniformly random amplitude with magnitude 1.

- ⊙ₛ(s/2 - i/4) is an amplitude chosen uniformly at random from a circle with radius 1/2 centered at -i/4.
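One way to make the ⊙ notation concrete is as a sampler: draw θ uniformly from [0, 2π), set s = e^(iθ), and return f(s). This is a sketch; `circle_sample` is a name I made up, not part of the notation. It checks that every sample of ⊙ₛ(s) has magnitude 1, and that every sample of ⊙ₛ(s/2 - i/4) sits at distance 1/2 from -i/4:

```python
import cmath
import math
import random

def circle_sample(f, rng: random.Random) -> complex:
    """Draw one sample of ⊙ₛ(f(s)): s = e^(iθ) with θ uniform on [0, 2π)."""
    theta = rng.uniform(0.0, 2 * math.pi)
    return f(cmath.exp(1j * theta))

rng = random.Random(0)  # fixed seed so the run is reproducible

# ⊙ₛ(s): samples of a uniformly random unit amplitude.
unit_samples = [circle_sample(lambda s: s, rng) for _ in range(1000)]

# ⊙ₛ(s/2 - i/4): samples on the circle of radius 1/2 around -i/4.
shifted_samples = [circle_sample(lambda s: s / 2 - 0.25j, rng) for _ in range(1000)]

print(max(abs(abs(z) - 1) for z in unit_samples))
print(max(abs(abs(z + 0.25j) - 0.5) for z in shifted_samples))
```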

Using this notation, we can correct our calculation of the total amplitude by accounting for the random phases caused by the uncalibrated setup:

AMPLITUDE1 = ⊙ₛ⊙ₜ(-sβ/2 - tγ/2)

(I’m calling this AMPLITUDE1, as it’s the result of the first experiment.)

Because the negative signs make no difference when multiplied by a uniformly random phase, this becomes:

AMPLITUDE1 = ⊙ₛ⊙ₜ(sβ/2 + tγ/2)

Second experiment:

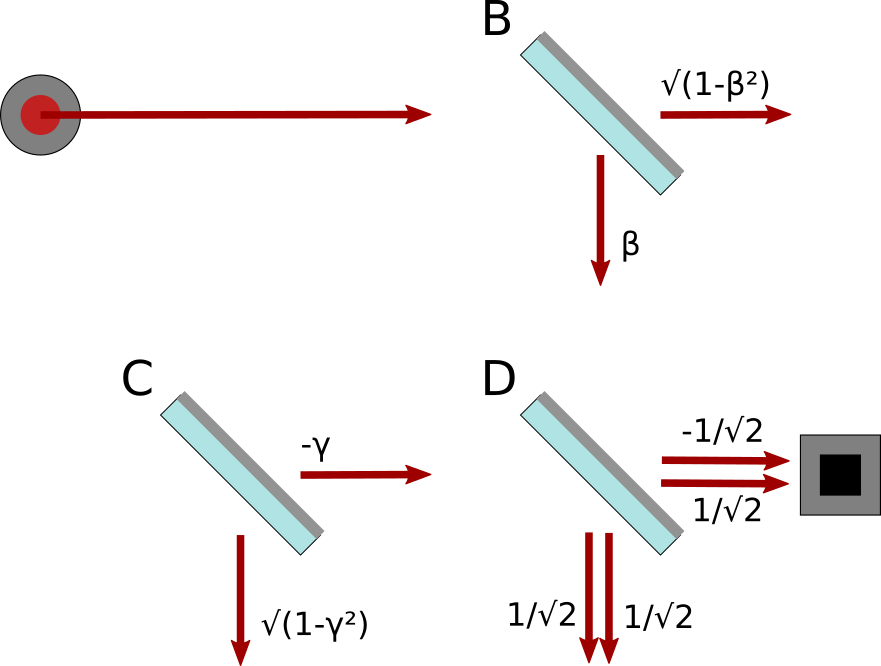

The second experiment is like the first, but with half-silvered mirror A removed:

With this setup, there is only one path to consider, A-B-D-detector. The amplitude of this path (removing the unnecessary negative sign):

AMPLITUDE2 = ⊙ₛ(sβ/√2)

Third experiment:

The third experiment is like the first, but with the half-silvered mirror at A replaced with a full mirror:

Now there is only the path A-C-D-detector, with amplitude:

AMPLITUDE3 = ⊙ₛ(sγ/√2)

These uncalibrated experiments act classically

Using the Born rule, we can determine the probability of the photon being detected in each of the three experiments. Since in each case we have a probability distribution over a quantum amplitude, we will take the expected value of the square of the absolute value of the amplitude:

E[|AMPLITUDE1|²] = E[⊙ₛ⊙ₜ(|sβ/2 + tγ/2|²)] = (|β|² + |γ|²)/4

E[|AMPLITUDE2|²] = E[⊙ₛ(|sβ/√2|²)] = |β|²/2

E[|AMPLITUDE3|²] = E[⊙ₛ(|sγ/√2|²)] = |γ|²/2

(I omit the proofs, but have evaluated these integrals by hand and checked the results with Wolfram Alpha.)
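For readers who want a quick numerical check of the omitted integrals, here is a sketch (not the calculation described above). It replaces each expectation with an average over N equally spaced phases for s and t; each integrand is a trigonometric polynomial of degree at most 1 in each angle, so the grid average should match the integral up to rounding. The helper names and the values β = 0.6, γ = 0.8 are arbitrary choices for illustration.

```python
import cmath
import math

N = 64  # number of equally spaced phases per random variable
PHASES = [cmath.exp(2j * math.pi * k / N) for k in range(N)]

def born(amplitude: complex) -> float:
    return abs(amplitude) ** 2

def expected_born_1(beta: float, gamma: float) -> float:
    """E[|⊙ₛ⊙ₜ(sβ/2 + tγ/2)|²], averaging over independent phases s and t."""
    return sum(born(s * beta / 2 + t * gamma / 2) for s in PHASES for t in PHASES) / N**2

def expected_born_single(coeff: float) -> float:
    """E[|⊙ₛ(s·coeff/√2)|²]: experiment 2 with coeff = β, experiment 3 with coeff = γ."""
    return sum(born(s * coeff / math.sqrt(2)) for s in PHASES) / N

beta, gamma = 0.6, 0.8  # arbitrary reflection amplitudes for B and C
p1 = expected_born_1(beta, gamma)
p2 = expected_born_single(beta)
p3 = expected_born_single(gamma)
classical_average = (p2 + p3) / 2

print(p1, (beta**2 + gamma**2) / 4)
print(p2, beta**2 / 2)
print(p3, gamma**2 / 2)
print(p1, classical_average)
```

The last line compares experiment 1 against the average of experiments 2 and 3, which is the classical reasoning spelled out next.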

This is classical behavior! Naive classical reasoning would proceed as follows:

- In the first experiment, the photon hits the half-silvered mirror at A, and either goes straight or reflects down with equal probability.

- If it goes straight through A, then it has the same chance of hitting the detector as the photon in the second experiment.

- If it reflects off of A, then it has the same chance of hitting the detector as the photon in the third experiment.

- Thus the probability of the detector going off in the first experiment is the average of the probability that it goes off in the second and the probability that it goes off in the third.

Which matches the conclusion: (|β|² + |γ|²)/4 is the average of |β|²/2 and |γ|²/2.

We can also work backwards, and take it as a given that this uncalibrated experiment should act classically. Doing so yields an equation that must constrain the Born rule:

2*E[⊙ₛ⊙ₜBorn(sβ/2 + tγ/2)] = E[⊙ₛBorn(sβ/√2)] + E[⊙ₜBorn(tγ/√2)]

One obvious alternative to the Born rule is to simply take the magnitude of the amplitude, |α| (why would you square it?). However, doing so violates the above equation, so it would not produce classical probability. I’m not sure if this equation has a unique solution, but it is at least quite restrictive.
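To see the constraint at work, this sketch evaluates both sides of the equation for two candidate rules, the Born rule |α|² and the alternative |α|, approximating each expectation by an average over equally spaced phases. The helper names and the values β = 0.6, γ = 0.8 are illustrative assumptions, not part of the setup above.

```python
import cmath
import math

N = 128  # phases per random variable; |α| is smooth here, so the grid converges quickly
PHASES = [cmath.exp(2j * math.pi * k / N) for k in range(N)]

def constraint_sides(rule, beta: float, gamma: float) -> tuple[float, float]:
    """Return (LHS, RHS) of 2·E[rule(sβ/2 + tγ/2)] = E[rule(sβ/√2)] + E[rule(tγ/√2)]."""
    lhs = 2 * sum(rule(s * beta / 2 + t * gamma / 2) for s in PHASES for t in PHASES) / N**2
    rhs = (sum(rule(s * beta / math.sqrt(2)) for s in PHASES)
           + sum(rule(t * gamma / math.sqrt(2)) for t in PHASES)) / N
    return lhs, rhs

def born(a: complex) -> float:
    return abs(a) ** 2

def magnitude(a: complex) -> float:
    return abs(a)

beta, gamma = 0.6, 0.8  # arbitrary reflection amplitudes for B and C
print("Born |α|²:", constraint_sides(born, beta, gamma))
print("|α|:      ", constraint_sides(magnitude, beta, gamma))
```

With the Born rule the two sides agree; with |α| the left side comes out noticeably smaller than the right.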